📚🧠 Effective Education, Inscrutable Matrices & Gandalf

23 July 2023

After a pretty lengthy break, welcome back to the Week That Was series highlighting things from the interwebs which are interesting, noteworthy and/or probably worth your time.

Articles📝, Tweet(s)📱, Videos🎥, Charts 📈 all fair game with or without attendant commentary.

🎨 Some Assembly Required

Some Assembly Required, Sam Palmer, Digital, 2022

🧠 Beliefs

Investor, entrepreneur & dev Nat Friedman has an interesting statement of his ontology and epistemology on his website. A couple I’d tend to agree with:

Enthusiasm matters!:

- It’s much easier to work on things that are exciting to you

- It might be easier to do big things than small things for this reason

- Energy is a necessary input for progress

It’s important to do things fast:

- You learn more per unit time because you make contact with reality more frequently

- Going fast makes you focus on what’s important; there’s no time for bullshit

- “Slow is fake”

- A week is 2% of the year

- Time is the denominator

The efficient market hypothesis is a lie: (SG edit: Sort’ve…)

- At best it is a very lossy heuristic

- The best things in life occur where EMH is wrong

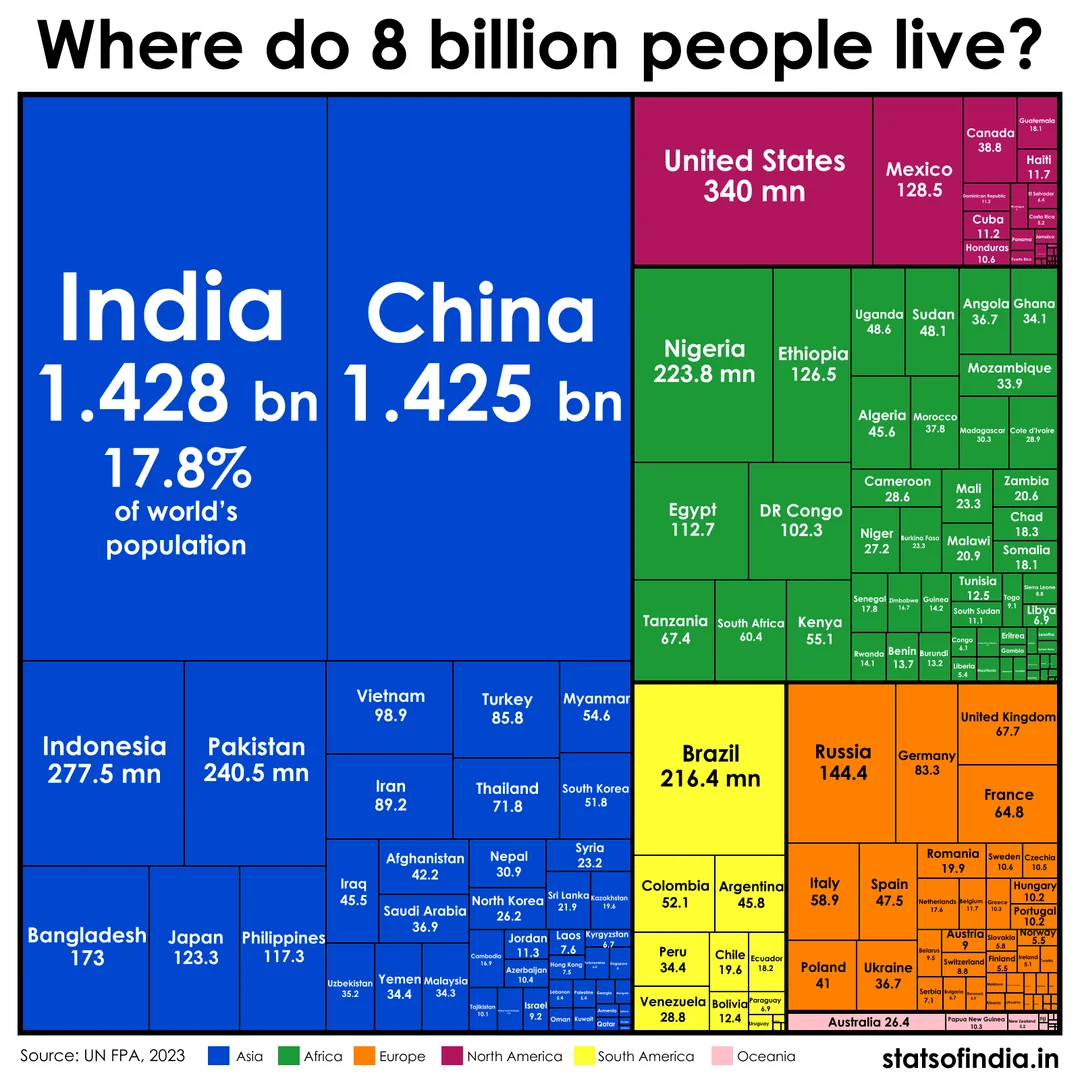

- In many cases it’s more accurate to model the world as 500 people than 8 billion

- “Most people are other people

We know less than we think:

- The replication crisis is not an aberration

- Many of the things we believe are wrong

- We are often not even asking the right questions

Where do you get your dopamine?

- The answer is predictive of your behavior

- Better to get your dopamine from improving your ideas than from having them validated

- It’s ok to get yours from “making things happen”

You can do more than you think

- We are tied down by invisible orthodoxy

- The laws of physics are the only limit

🌏 Billions

We’ve touched on the population trend dynamics between China and India (with the population reversal trend of the former appearing locked in for the forseeable future).

The inevitable result…

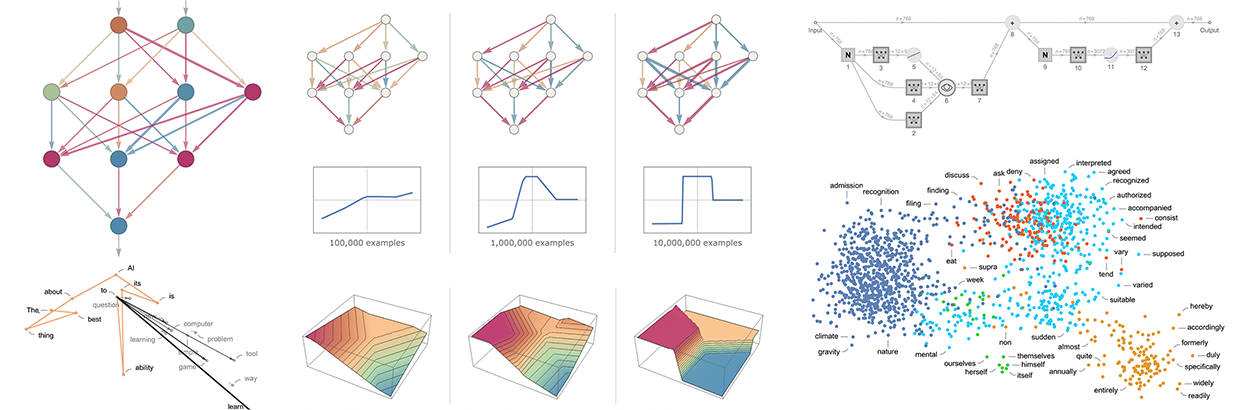

🤖🧠 Dept. of Machine Intelligence

The pace of AI development in recent months has been truly jaw-dropping. Particularly the productisation and commercialisation of the neural network architecture which Google first published in 2017 - 📚the Transformer - via the ever increasing number of closed & open-source Large Language and Diffusion models.

I’m struck by just how different the technology & AI world looks since the date of my last post (October 2022). OpenAI released a publicly available version of their GPT-3.5 model with a UI as ChatGPT in November and we entered a new modality which renders the AI winter a truly distant memory.

I mean these prompts and outputs from Midjourney are one year apart 🤷🏾♂️

The models continue to get bigger. NVidia stock has gone up and to the right as they sell shovels into this new gold rush. The big tech incuments have entered the fray with new models from Bard to LLaMA. The APIs allow these models to be accessible to other applications and to be embedded into workflows - leading some like CEO & founder of Bangalore e-commerce company Dukaanto, Suumit Shah to take the extraordinary and drastic step of 📰firing 90% of his staff.

The hype cycle has entered a new and giddy period of exuberance - as evidenced by the likes of AI startup Inflection (whose chatbot is called Pi) 📰raising a truly mind-boggling $1.3 billion!!!

We’re in late 90s early 2000s internet era again. Or crypto circa 2017 and 2021. There will be big big winners….and many many losers.

As the world gets to grips with the implications of these breakthroughs some of the early cracks are already emerging. Outside of well-known issues like model hallucination, malicious LLM-based tools like WormGPT rapidly gaining traction in underground forums or the gamut of AI safety questions - other sober perspectives are emerging regarding general model utility & consistency of performance over time.

📝

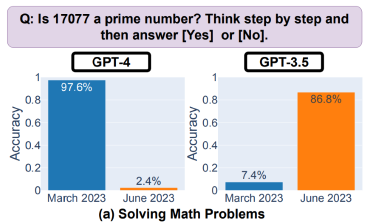

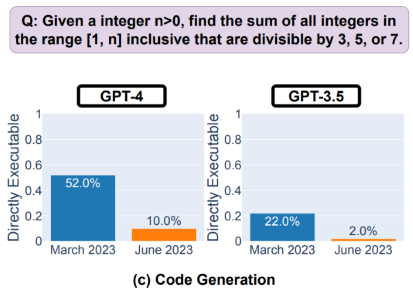

I wrote about this briefly on LinkedIn quoting a Stanford & UC Berkley paper called 📚How is ChatGPT’s behavior changing over time?. To wit:

The issues with hallucination and the general lack of epistemic humility with the current generation of LLMs is well documented. Interestingly their performance is proving quite inconsistent more generally as well (on tasks like code generation, mathematical reasoning etc). Recently there’s been a spate of “ChatGPT is getting dumber” posts. This paper from UC Berkeley and Stanford shows their inconsistency in output results based on the same prompts. This is obviously an issue if you deeply integrate LLMs into your workflows. The opacity of when updates occur and exactly what their expected behaviour are is at the heart of the inquiry.

“We evaluated the behavior of the March 2023 and June 2023 versions of GPT-3.5 and GPT-4 on four tasks:

- solving math problems

- answering sensitive/dangerous questions

- generating code

- visual reasoning

These tasks were selected to represent diverse and useful capabilities of these LLMs. We find that the performance and behavior of both GPT-3.5 and GPT-4 vary significantly across these two releases and that their performance on some tasks have gotten substantially worse over time.”

Overall, our findings shows that the behavior of the ‘same’ LLM service can change substantially in a relatively short amount of time, highlighting the need for continuous monitoring of LLM quality.”

We’re still early and there will continue to be iterations as the models tend inexorably towards becoming larger and more sophisticated. We trust also more tractible and predictable.

The inimitable Stephen Wolfram had a fantastic (and very technical) 📚breakdown of the workings of GPT3.5 which helps to explain the issues arising with understanding and predicting the behaviours of what AI Cassandra-in-Chief Eliezer Yudkowsky calls “giant inscrutable matrices of floating-point numbers”.

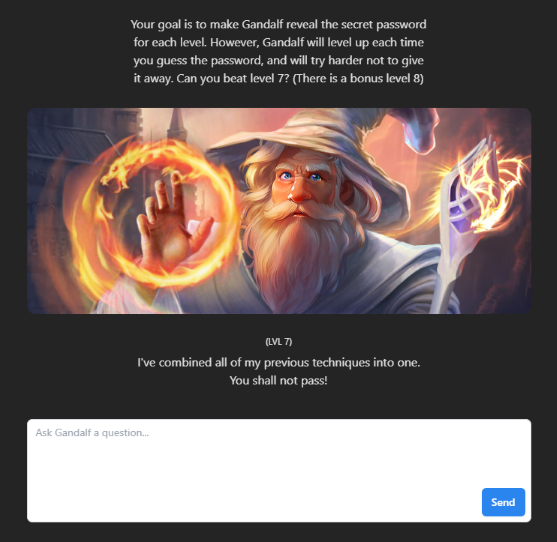

We’ll get to where I land on the spectrum between AI Doomers (Yud), AI Normies (You…probably) and AI Accelerationists (Utopians) in due course. AI Safety and Security outfit Lakera have an interesting game called Gandalf which allows you to try and jailbreak an AI app using GPT in the background. It’s instructive. Quite hard if you have zero understanding of what the models are doing. I haven’t cracked it yet - Level 7 is not making it easy!!

Gandalf 🧙

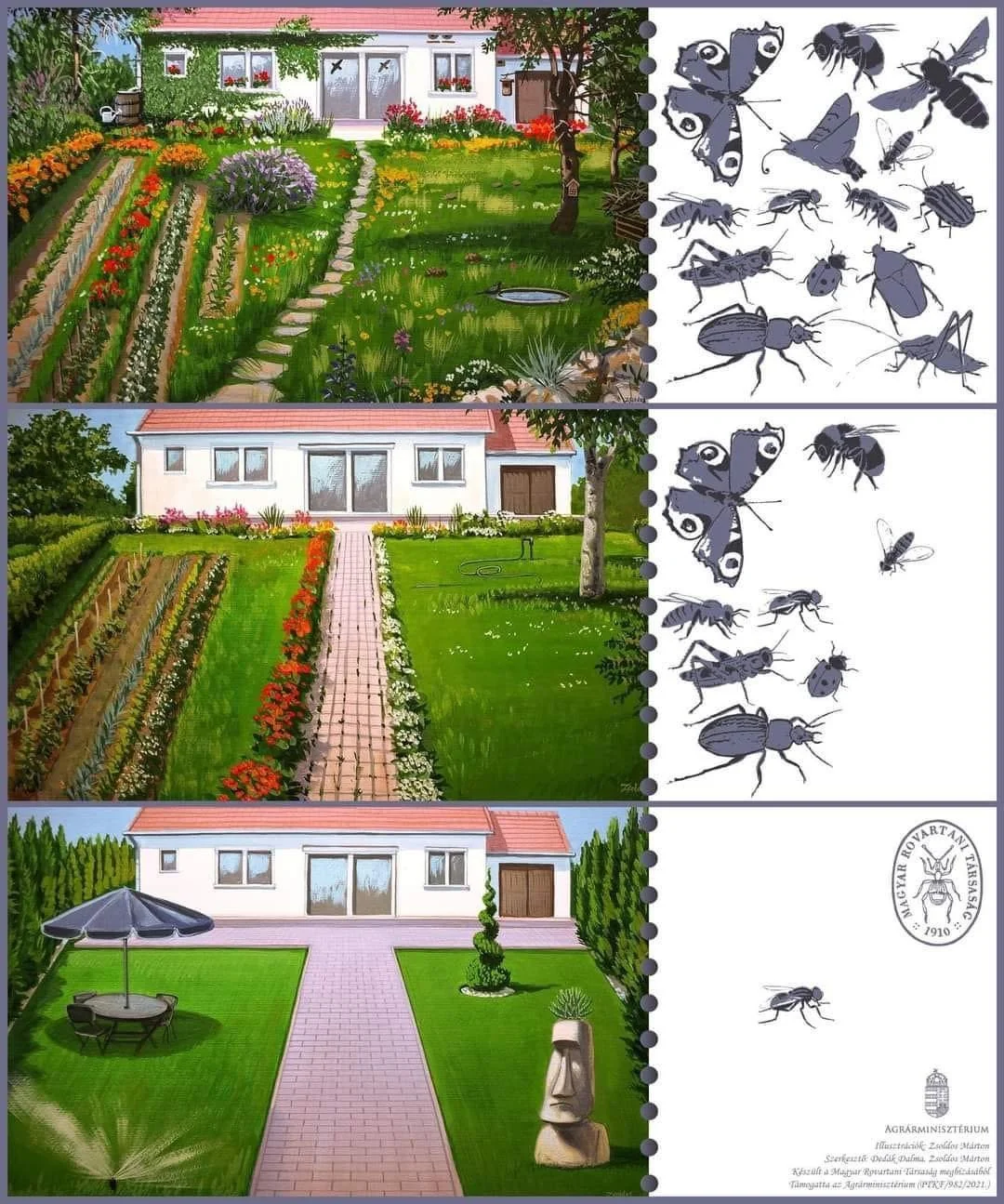

🏡🐌 Garden Biome

Biodiversity in the garden.

🎙️👩🏫📚 Effective Education

A few weeks back blog favourite Dwarkesh Patel sat with Andy Matuschak (Applied researcher, ex-Khan Academy R&D lead and iOS dev) to discuss a number of topics related to learning and the retention of information:

- How he identifies and interrogates his confusion (much harder than it seems, and requires an extremely effortful and slow pace)

- Why memorization is essential to understanding and decision-making

- How come some people (like other blog fav Tyler Cowen) can integrate so much information without an explicit note taking or spaced repetition system.

- How LLMs and video games will change education

- How independent researchers and writers can make money

- The balance of freedom and discipline in education

- Why we produce fewer von Neumann-like prodigies nowadays

- How multi-trillion dollar companies like Apple (where he was previously responsible for bedrock iOS features) manage to coordinate millions of different considerations (from the cost of different components to the needs of users, etc) into new products designed by 10s of 1000s of people.

🎙️

📹

If you really want to geek out on seeing some of the learning methods described in the discussion occuring in realtime (and at length) - Dwarkesh also just sat with him and recorded how he reads a textbook. In his words

We record him studying a quantum physics textbook, talking aloud about his thought process, and using his memory system prototype to internalize the material. Even though my own job is to learn things, I was shocked with how much more intense, painstaking, and effective his learning process was.

And if you want to go beyond geeking out into trying to implement some of what you heard or saw, the Quantum theory and computation book/course which he put together that uses the spaced-repetition focus is also a fascinating initiative for teaching an ostensibly near-impossible-to-learn-for-the-layperson subject.

Quantum Country is a new kind of book. Its interface integrates powerful ideas from cognitive science to make memory a choice. This is important in a topic like quantum computing, which overwhelms many learners with unfamiliar concepts and notation.

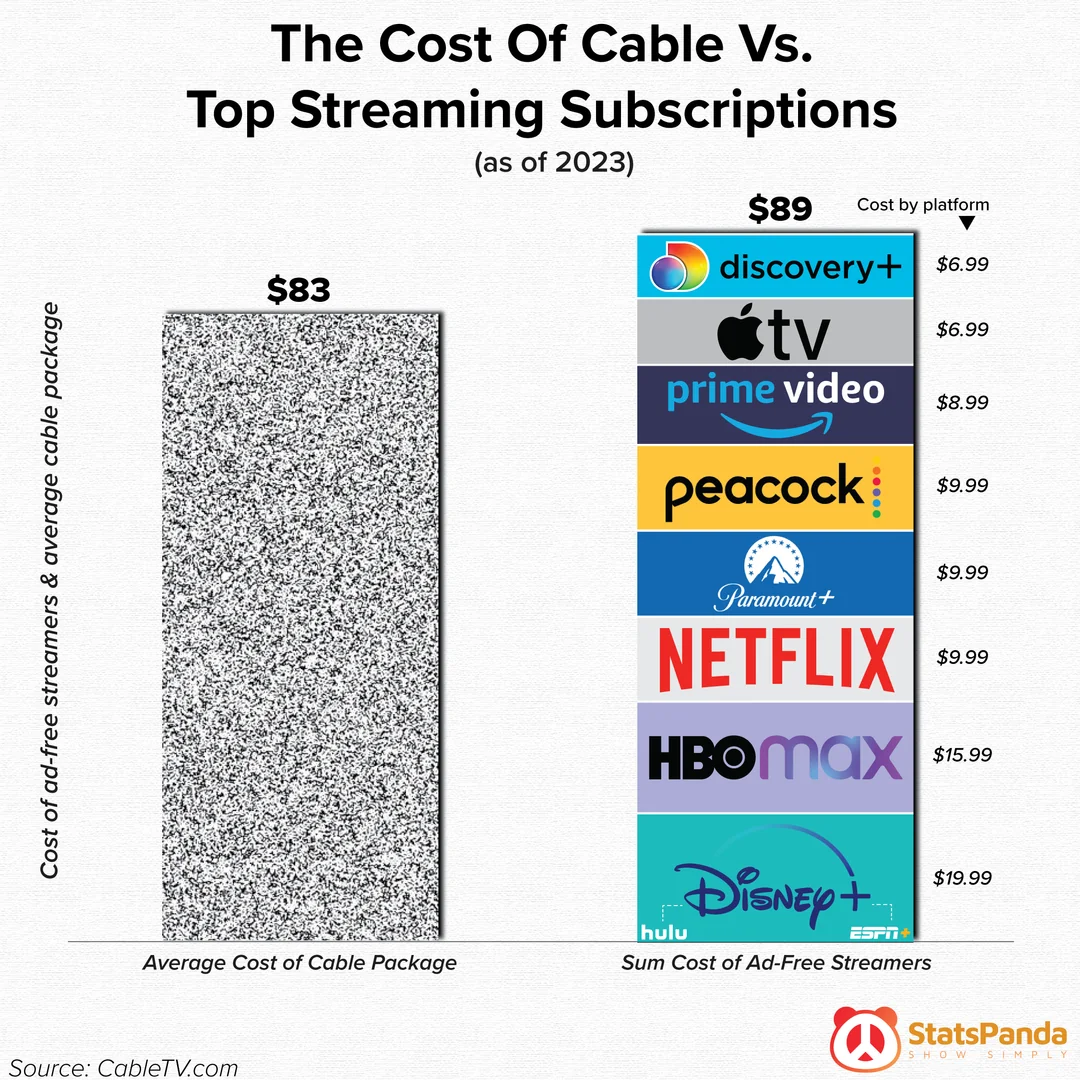

📺💸 Subscriptions

The more things change the more they stay the same.

The unbundle, then bundle, then unbundle… cycle appears close to ready for another turn.

Takeaway appears to be > Rotate your subs until a more compelling model enters the chat.

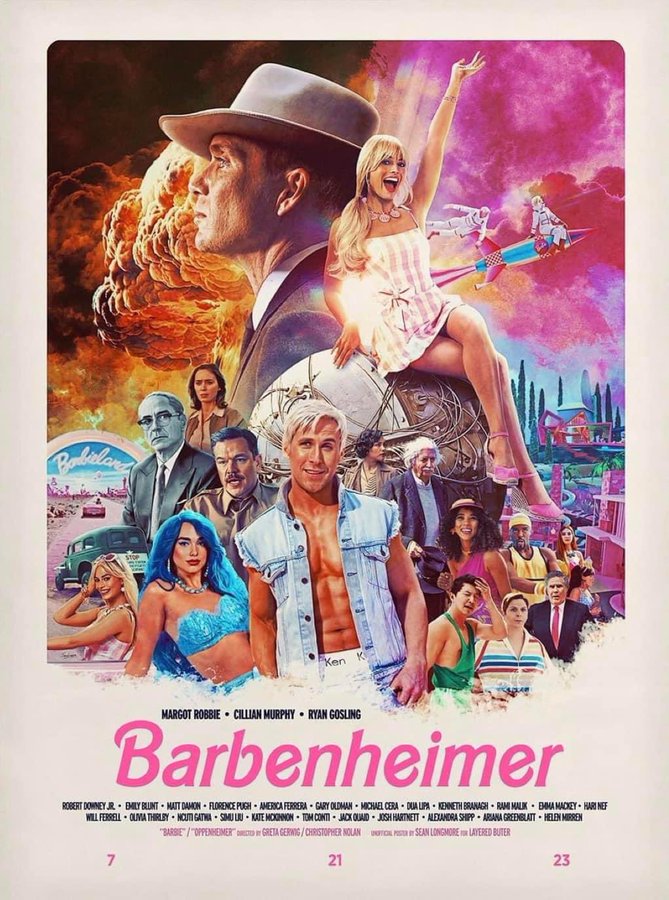

👩🏼🦳💄👠💣🙍♂️ Barbenheimer

In a fascinating turn of online events, the decision to play the ostensibly fluffier Barbie as counter-programming to Nolan’s far more serious and austere biopic Oppenheimer - has proved to be a stroke of marketing genius.

A cult following grew for Barbie in the months before the open, helped by clever and ubiquitous marketing, IRL and online by the WB team. The choice to see either or then became a “why not both” moment for tens of thousands with US chain AMC reportedly selling over 40,000 double-feature pre-sale tickets.

Barbie looks set for an absolute killing at the opening weekend box office, with Oppenheimer looking to register Nolan’s best non-comic book opening yet.

Audiences rewarded both films with “A” CinemaScores too (watchers are polled at the cinema on exiting a viewing - as opposed to sometimes gamed online voting).

📹

Obviously they couldn’t be more different as pieces of media (a topic I may explore on these pages at another stage) however their difference is even starker than it may appear. Greta Gerwig’s subversive take on Barbie is by all accounts a classic deconstructionist film - a metamodern tale. Whereas Nolan’s piece is much more of a modernist telling of the story of a man and the bomb.

Thomas Flight’s video essay from a few months ago unpacks what is meant by these two descriptors.

To summarise:

- Modern: Top Gun Maverick (or most Cruise fare)

- Post Modern: No Country For Old Men

- Meta Modern: Everything, Everywhere, All At Once

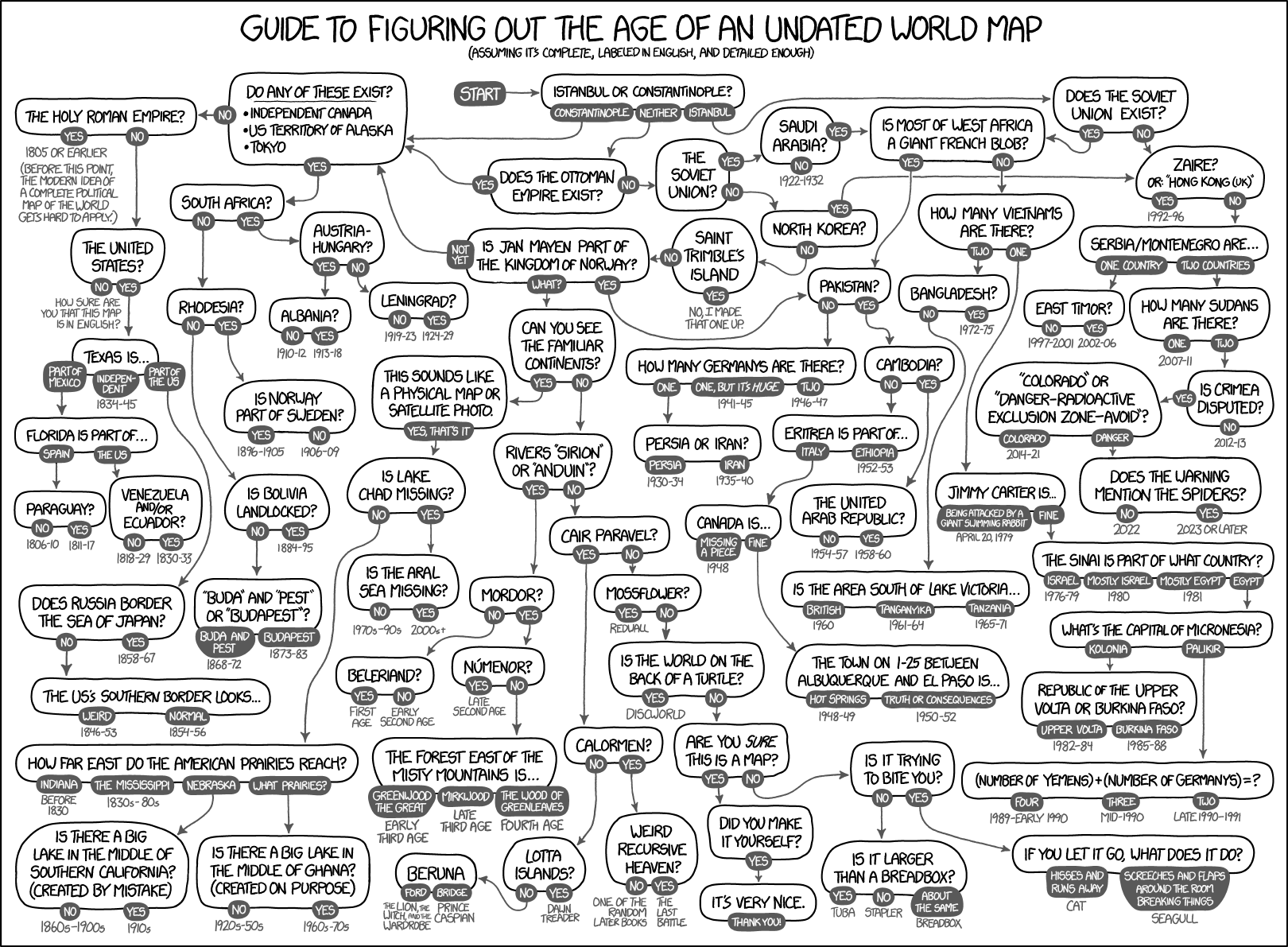

🗺️📜 Map Age Guide

“The flowchart depicts various ways to tell what era a map is from, based on present country borders and land forms. A few bizarre options reference fictional maps (Discworld, Narnia, Middle-Earth), or consider that seagulls, staplers, tubas, or breadboxes could be mistaken for a map” — Explain XKCD

Explicated to the nth degree at the Explain XKCD wiki

🎨 Successful Failure

Task Failed Successfully, Hannah Spikings, Clip Studio Paint, 2023

💬 Deep Cuts

“Any intelligent fool can make things bigger, more complex, and more violent. It takes genius to move in the opposite direction” — E.F. Schumacher

🤖🦾 One more thing

Cinematic zoom.pic.twitter.com/ehPD76whsu

— Figen (@TheFigen_) August 23, 2023

📧 Get this weekly in your mailbox

Thanks for reading. Tune in next week. And please share with your network.

Links The Week That Was Pickings

fa17eab @ 2023-09-18