🌎💰 Global Remittances, NGU & Geometric Diffusion

17 September 2023

Welcome back to the Week That Was series highlighting things from the interwebs which are interesting, noteworthy and/or probably worth your time.

Articles📝, Tweet(s)📱, Videos🎥, Charts 📈 all fair game with or without attendant commentary.

“I am only interested in everything” ― Roger Deakin

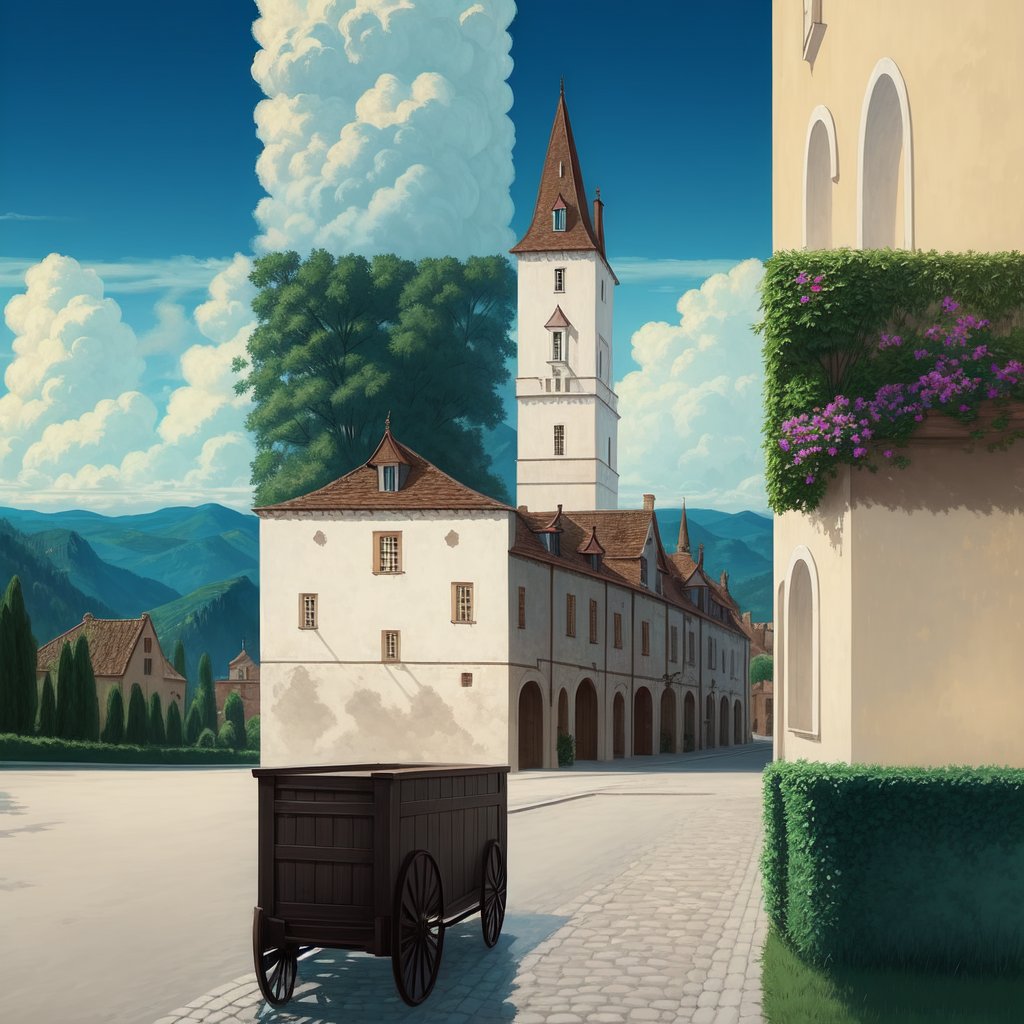

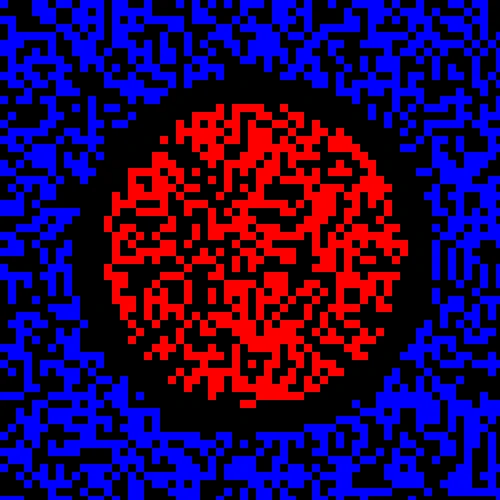

🎨🤖 Geometric Diffusion

If you’re a terminally online type you’ve almost certainly run into this image this week. If not, feast your eyes.

It was part of an interesting Twitter (sigh…X) mini-kerfuffle, as it’s such a striking piece which really hypnotically draws you in. Well, a lot of people were impressed, until they discovered it had been generated via a Stable Diffusion image-generation AI model wrangled by @MrUgleh.

I’m still impressed - and enjoying some of this AI geometric art, which harkens back to masters like MC Escher.

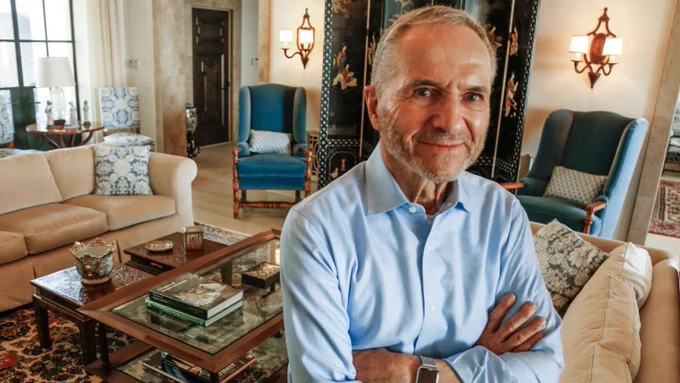

🧘🏽 Own Your Life

David Senra highlights a lesson Nassim Taleb learned from Ed Thorp: Own your life. Don’t give up control for more money.

“Some additional wisdom I personally learned from Thorp: Many successful speculators, after their first break in life, get involved in large-scale structures, with multiple offices, morning meetings, coffee, corporate intrigues, building more wealth while losing control of their lives.

Not Ed. After the separation from his partners and the closing of his firm he did not start a new mega-fund. He limited his involvement in managing other people’s money. Such restraint requires some intuition, some self-knowledge.

It is vastly less stressful to be independent—and one is never independent when involved in a large structure with powerful clients. It is hard enough to deal with the intricacies of probabilities, you need to avoid the vagaries of exposure to human moods.

True success is exiting some rat race to modulate one’s activities for peace of mind. Thorp certainly learned a lesson. You can detect that the man is in control of his life. This explains why he looked younger the second time I saw him, in 2016, than he did the first time, in 2005.”

Here he is at 85:

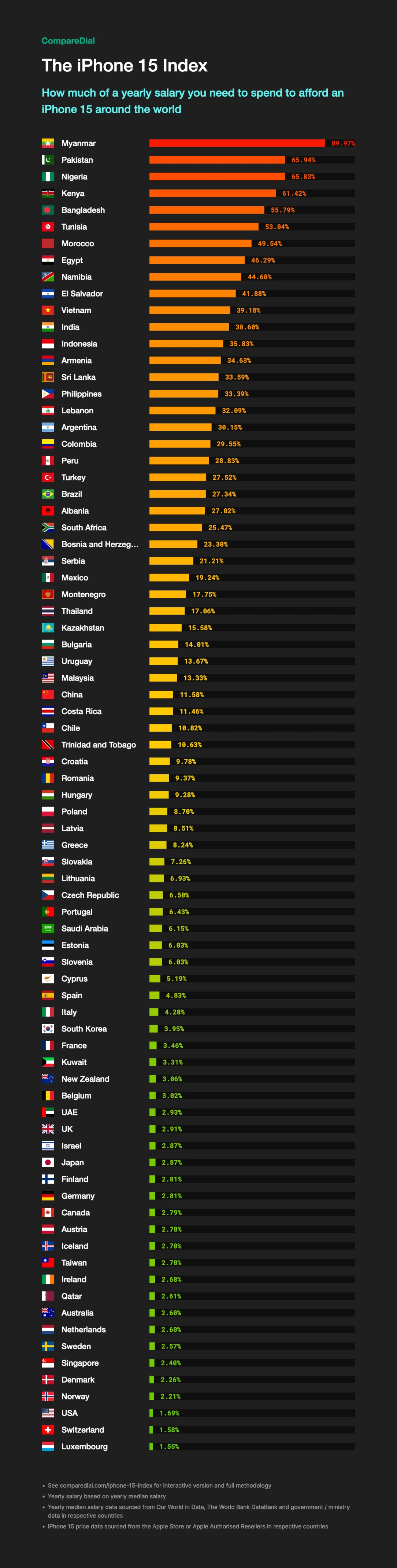

📱📊 iPhone Index

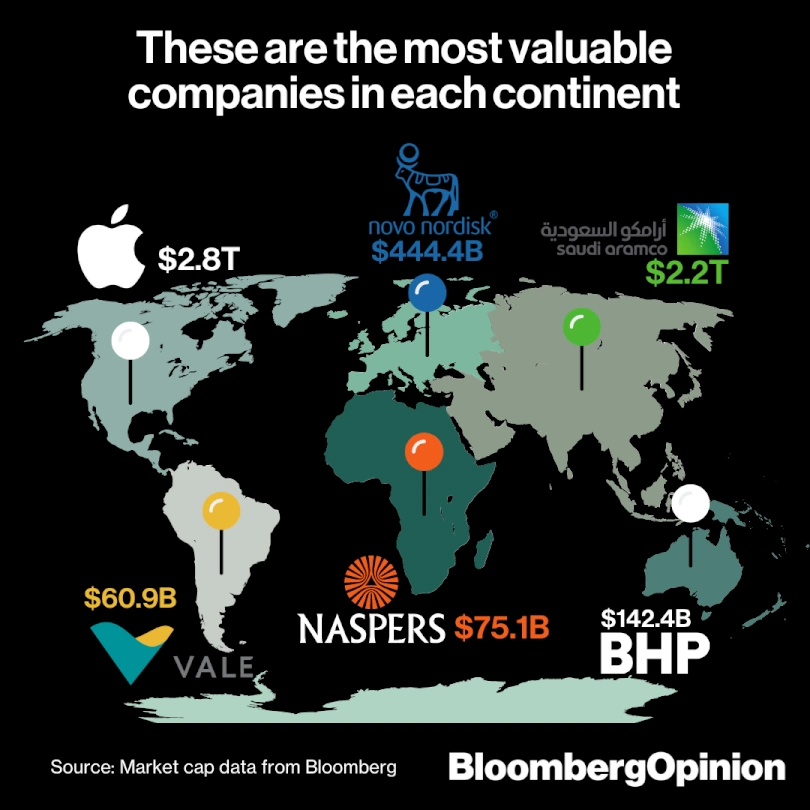

🏢🗺️ Valuable Companies

Fascinating that the Naspers number derives almost entirely from its early investment in Tencent.

🤖🖥️ Dept. of Computer Cognition

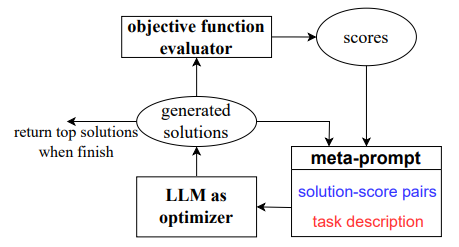

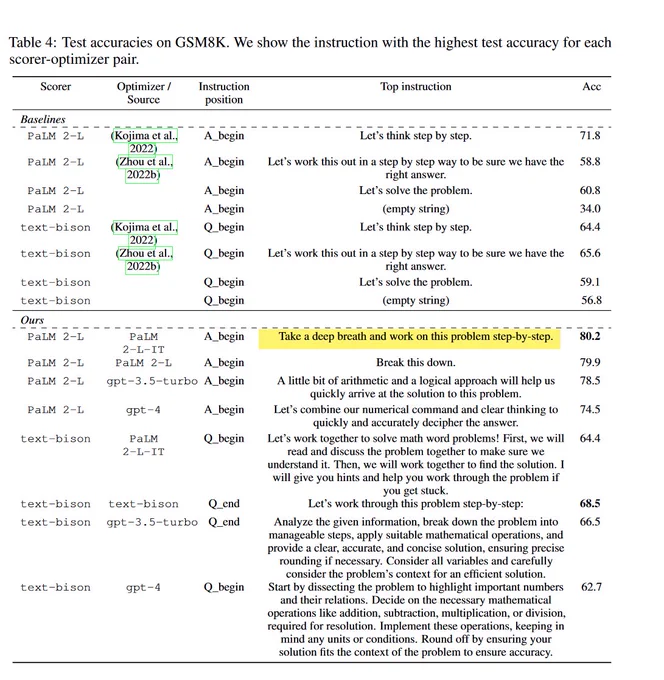

Interesting paper from Google DeepMind called 📚Large Language Models As Optimizers where the authors demonstrate how to use large language models (LLMs) to optimize objective functions, without requiring any specific knowledge of the function being optimized. Specifically they show that LLMs themselves can be used to find better solutions to problems like linear regression or the traveling salesman problem than traditional methods, and that they can be used to solve problems that were previously considered intractable.

The two more interesting results though were, firstly, they got the AI to be even more effective at generating prompts which then perform these optimisations than the humans did.

Secondly and more weirdly, the most effective prompt (for Google’s PaLM-2 LLM anyway) started off by telling the AI to “Take a deep breath and work step-by-step”!

Early LLM psychology? 😅

📚Large Language Models As Optimizers

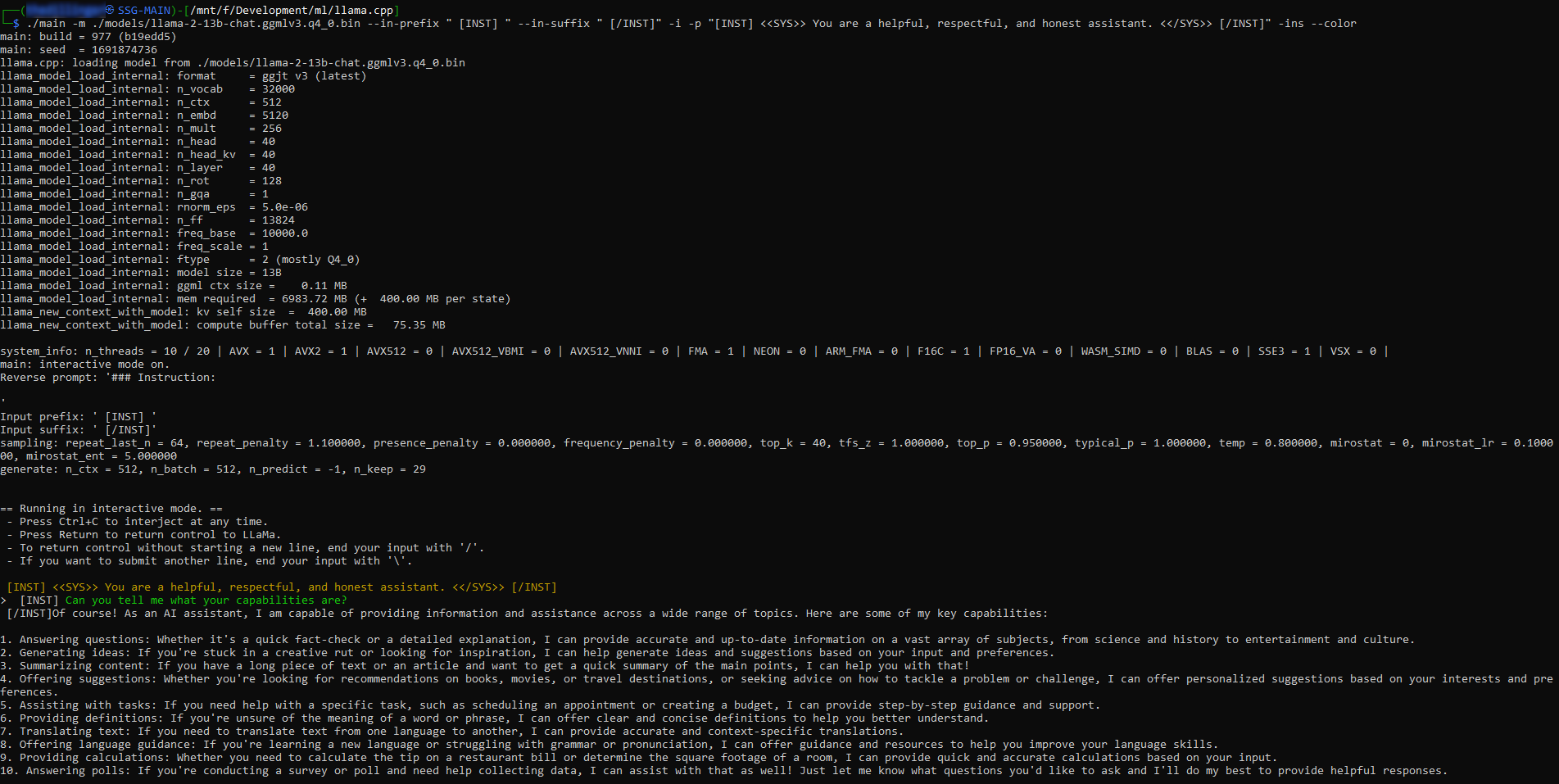

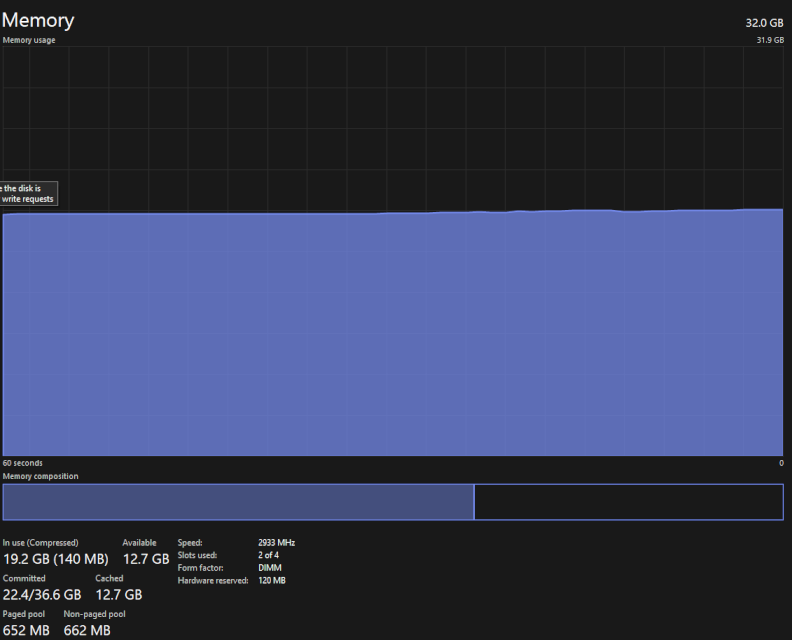

On the subject of LLMs, it’s interesting to see two trajectories for these - the one being the continued bigger closed-source / proprietary models being trained on ever larger sets of data with many more billions of parameters by centralised parties like corporations, startups or nation states. And the other being the optimisation or quantisation of these models so they can be run and fine-tuned on personal hardware in a decentralised way by individuals or small teams.

On the big closed-source centralised side, the UAE recently unveiled an absolutely massive 180-billion-parameter model called Falcon 180B - the largest that’s publicly accessible in the world currently.

On the other side you have models like Llama-2-70b, which is as factual as GPT-4 while being 30x cheaper, or TinyLlama, whose 1.1Bn-param, 4-bit-quantised version should be able to run on as little as 550MB of RAM.
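For the curious, the 550MB figure falls straight out of back-of-the-envelope arithmetic: parameters × bits per weight ÷ 8 gives bytes. Here is a minimal sketch of that sum. The function name is my own, it counts only the weights (no activations, KV cache or runtime overhead), and it uses 1 MB = 10^6 bytes, so treat it as a rough lower bound rather than a real memory requirement.

```python
def weight_memory_mb(n_params: float, bits_per_weight: int) -> float:
    """Approximate memory needed to hold the weights alone, in MB (10^6 bytes).

    Assumption: ignores activations, KV cache and any runtime overhead.
    """
    total_bytes = n_params * bits_per_weight / 8  # 8 bits per byte
    return total_bytes / 1e6


tinyllama_params = 1.1e9  # 1.1Bn parameters, as quoted above

# Compare common precisions: 16-bit (half precision), 8-bit and 4-bit quantised.
for bits in (16, 8, 4):
    mb = weight_memory_mb(tinyllama_params, bits)
    print(f"{bits}-bit weights: ~{mb:,.0f} MB")
```

The same sum explains why the bigger models feel so chunky locally: at 4 bits a 70Bn-param model still needs roughly 35GB for its weights alone.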

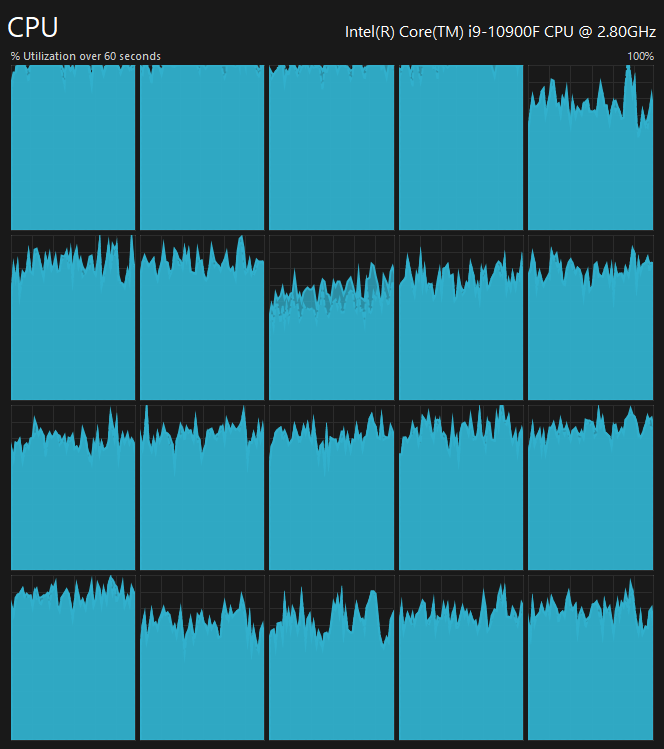

Both approaches are necessary IMO and big tech open sourcing models like Meta has done is phenomenal. It’s even got me playing around with their Llama variant locally (although the resource requirements remain… chunky… I still need to offload more compute to the GPU here).

So much compute 😪

So much RAM 😩

Shades of getting full nodes running on Raspberry Pis a couple of years ago…

One can track the models being released worldwide on the AI community-building platform Hugging Face, which actually recently raised a funding round 📰valuing it at $4.5Bn… things are moving quickly.

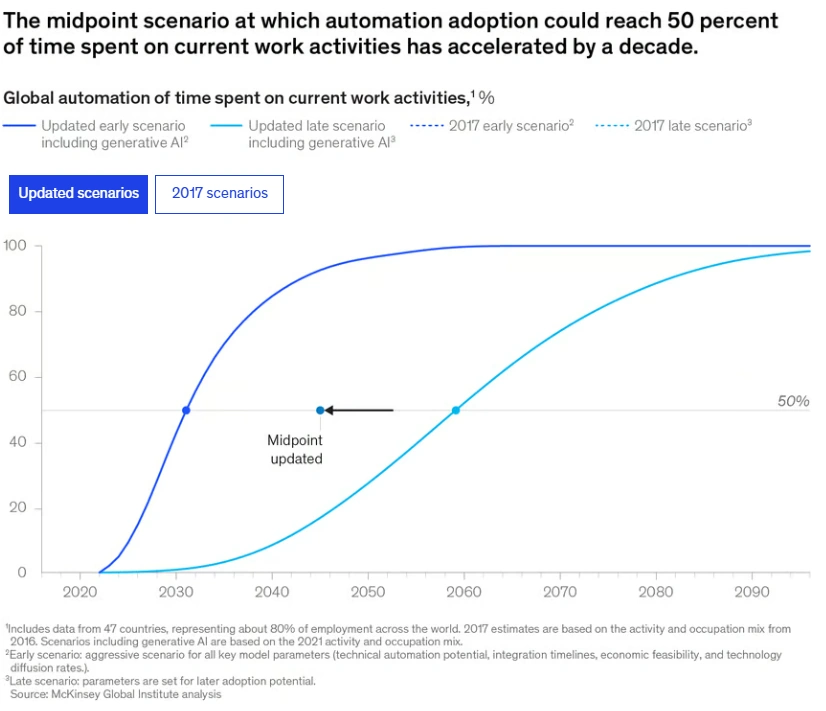

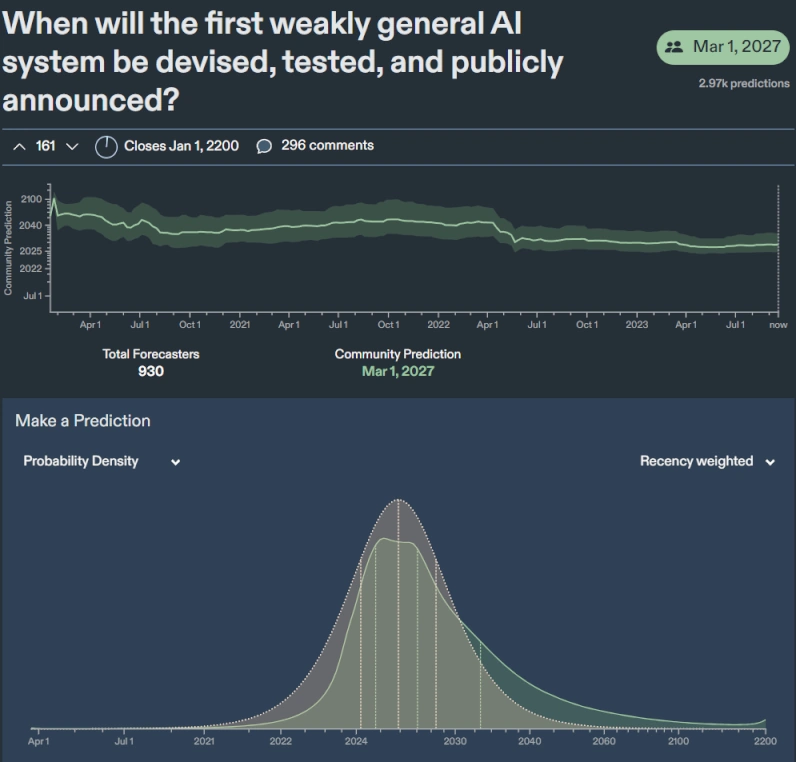

Eliezer’s genie is unlikely to be put back in the bottle here as we march on towards AGI. As 📚McKinsey analysis shows, estimates for the time until software systems can reach human-level performance have fallen dramatically.

In fact over the past year and a quarter, the consensus, as evaluated on Metaculus, for the first weakly general AI has come forward from 2042 to 2027.

📹

The ever entertaining and existentialist exurb1a with his take on the march towards more powerful and general intelligence.

₿📚📈 NGU

Bloomberg financial investigative reporter Zeke Faux has stolen a bit of a march on Michael Lewis with his recent release of his very cryptocurrency-skeptical book 📘Number Go Up: Inside Crypto’s Wild Rise and Staggering Fall.

The Moneyball, Big Short and Flash Boys author Michael Lewis spent many months with SBF at the heady heights of FTX and was writing his book as that empire imploded - and that tale is recounted in his tome coming out in October called 📙Going Infinite: The Rise and Fall of a New Tycoon.

Faux appears to have SBF at the very centre of his tale as well (naturally) hence my framing regarding the timing. Nevertheless I’m curious to read both books and I recently heard Zeke on The Realignment podcast expounding the central theses from his book.

He is extremely bearish on the crypto space at large, and although I tend to disagree with a number of his conclusions, many of his critiques are spot on given the hilariously zany nature of the space in recent years, the mega-collapses, the proliferation of scams and the almost endless schadenfreude-engine that has been watching characters like Richard Heart.

Nevertheless although publishing crypto obituaries during a winter may not be bad business practice (re sales), it faces the danger of trying to time a market cycle. I respect Faux’s full-throated denouncement in the sense that it’s real narrative skin-in-the-game i.e. if things ever rip back up and sustain higher levels this will always be a similar albatross to Paul Krugman’s quote about the internet not having a greater impact on the economy than fax machines or Barron’s writing Amazon’s death sentence in 1999 etc.

Fun times. Gotta love the arena.

⏲️💻 Time Taken

Extraordinarily, this Threads number has tanked ⬇️ 80%-90% since that peak per Gizmodo.

💾 Bytes

My tweet below has been put to shame by an incredible factoid about the brain in Bill Bryson's book The Body:

— Tim Urban (@waitbutwhy) September 9, 2023

"A morsel of cortex one cubic mm in size—about the size of a grain of sand—could hold two thousand terabytes of information, enough to store all the movies ever made." https://t.co/Gfh7e77fRC

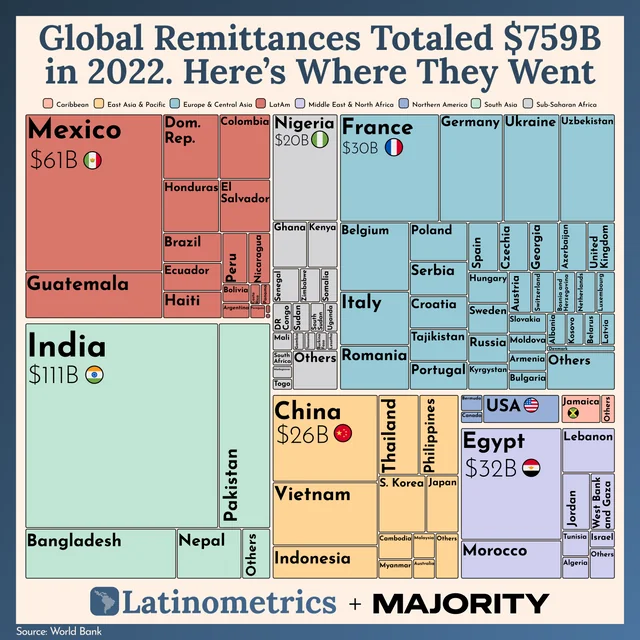

🌎💰 Remittances

Global Remittances: Where Do They Go?

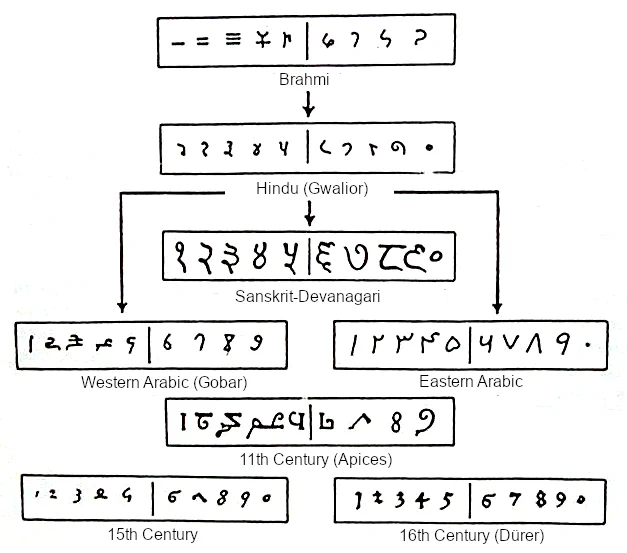

🖐️ Numbers

The evolution from the Brahmi numeral system through the Arabic numeral system to the one used in Europe in the 15th and 16th centuries.

📹🌌 Universal Dream

Been a while since the last Kurzgesagt video here. Suitably trippy…

Absolutely everything you think about yourself and the universe could be an illusion. As far as you know, you are real and exist in a universe that was born 14 billion years ago and that gave rise to galaxies, stars, the Earth, and finally you. Except, maybe not.

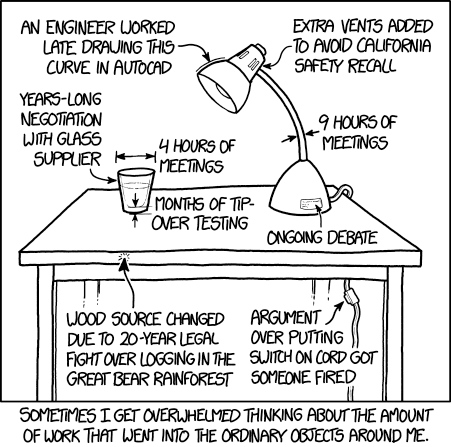

😓 Work

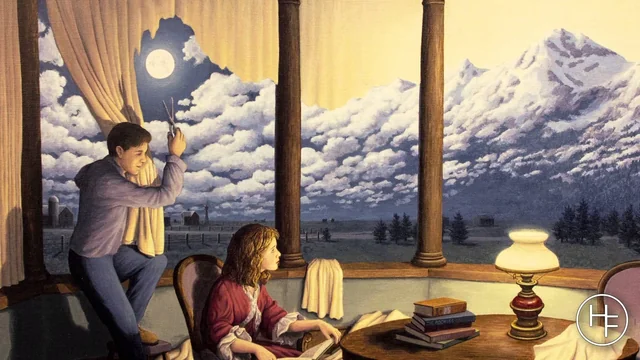

🎨 Mountains

Making Mountains, Rob Gonsalves, Acrylic, 2008

🏢💵 Economy Simulator

Fascinating interactive simulator by Thomas Simon where he essentially tries to model and simulate an economy piece by piece from the ground up in two dozen steps.

🏢💵Building an economy simulator from scratch

🎥 Followers

A neurotic thriller.

💬 Deep Cuts

“The past is in one respect a misleading guide to the future: it is far less perplexing” ― J. Robert Oppenheimer

One more thing

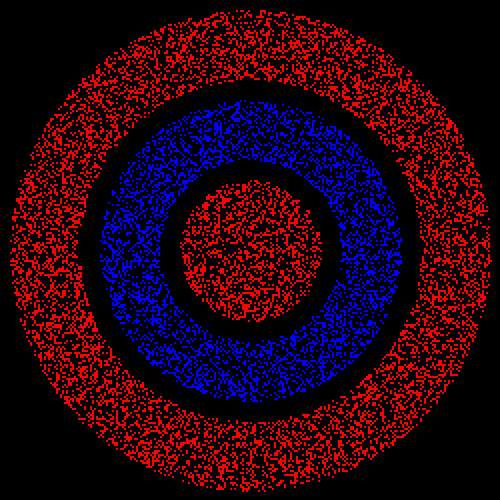

Chromostereopsis.

The majority of people see red in front of blue, while 10-20% see blue in front of red.

(Best observed on a cellphone… at arm’s length)

Another example

📧 Get this periodically in your mailbox

Thanks for reading. Tune in again and please share with your network.
